Salesforce Integration Approaches

Most, if not all, enterprise Salesforce projects involve an element of integration. In this article I summarise three common integration use cases and an approach that works well for each of them. This is useful for the CTA exam, where you are given scenarios and have to weigh up the possible approaches for solving them.

Pattern 1 - Remote Process Invocation - Request Reply

Scenario

In this scenario we want to make a callout from Salesforce to a Remote System and receive an immediate response. For example, we want to make a callout from Salesforce to SAP to check on Stock for a particular Product chosen on a Salesforce opportunity. We want the stock check results to be displayed in real time.

Approach

- Create a Lightning Component which has an Apex Controller that can make a REST or SOAP callout to an external service. Create a button on the Lightning Component to invoke the callout method in your Apex Controller.

- In the Apex Controller, use the Apex Continuation Framework to make the callout real-time but asynchronous.

- Using the Apex Continuation Framework ensures that you do not fall foul of the 'long running transaction limit' in Salesforce.

- The Long Running Transaction Limit applies for any transaction that takes longer than 5 seconds to complete. If you have more than 10 synchronous transactions, executed at the same time which take longer than 5 seconds to complete, then the 11th transaction will fail.
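To make the limit concrete, here is a rough Python model of the rule as described above. It is not Salesforce code: the function name `admitted` and the `(start_time, duration)` data shape are assumptions for illustration, and the model only captures the simplified rule that a new request is rejected while 10 requests that have already run for more than 5 seconds are still in flight.

```python
from typing import List, Tuple

LONG_RUNNING_THRESHOLD_S = 5   # a request counts as long-running after 5 seconds
MAX_LONG_RUNNING = 10          # long-running requests allowed at the same time


def admitted(requests: List[Tuple[float, float]]) -> List[bool]:
    """requests: (start_time, duration) pairs in seconds, sorted by start time.

    Returns one flag per request: True if it was allowed to start.
    """
    results: List[bool] = []
    running: List[Tuple[float, float]] = []  # admitted requests as (start, end)
    for start, duration in requests:
        # Count admitted requests that are still in flight at `start`
        # and have already been running for more than the threshold.
        long_running = sum(
            1 for s, e in running
            if e > start and start - s > LONG_RUNNING_THRESHOLD_S
        )
        ok = long_running < MAX_LONG_RUNNING
        results.append(ok)
        if ok:
            running.append((start, start + duration))
    return results


# Ten 30-second requests start at t=0; an eleventh arrives at t=6.
blocked = admitted([(0, 30)] * 10 + [(6, 1)])
# Same load, but the eleventh arrives at t=3, before any request is long-running.
allowed = admitted([(0, 30)] * 10 + [(3, 1)])
```

In the first case the eleventh request is rejected, in the second it is admitted, which is why moving slow callouts out of the synchronous transaction matters.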

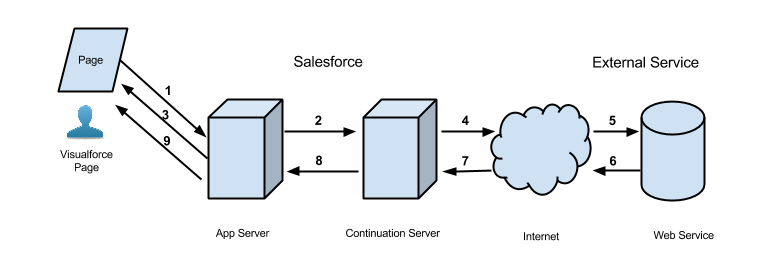

Apex Continuation Framework

Picture Courtesy: Salesforce.com

- User invokes an action on a Lightning Component or VF Page, which invokes a method in the Apex Controller.

- App Server receives the request and adds the request to the Continuation Server

- Control is returned back to the user on the page

- Steps 4 to 7 involve the callout being made to the external service, and the response being returned

- The Continuation Server provides the response back to the Apex Class, which invokes the callback method to provide the response to the User on the page.

- The key thing to note here is that the user was not waiting for the response to arrive, making this callout real-time but truly asynchronous.

- You can make up to 3 asynchronous callouts as part of a single continuation.
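The flow above can be imitated outside Salesforce to see the shape of the pattern. The sketch below uses Python's asyncio rather than Apex. The `Continuation` class, its `add_request` method and the `fake_stock_service` coroutine are hypothetical stand-ins, not the Apex Continuation API. It shows a handler registering callouts (capped at three, as noted above), returning control straight away, and a callback receiving the responses later.

```python
import asyncio
from typing import Awaitable, Callable, List

MAX_CALLOUTS = 3  # per the text: up to 3 callouts in a single continuation


class Continuation:
    """Hypothetical stand-in: holds pending callouts and a callback."""

    def __init__(self, callback: Callable[[List[str]], None]) -> None:
        self.callback = callback
        self.requests: List[Awaitable[str]] = []

    def add_request(self, request: Awaitable[str]) -> None:
        if len(self.requests) >= MAX_CALLOUTS:
            raise ValueError("a continuation holds at most 3 callouts")
        self.requests.append(request)

    async def run(self) -> None:
        # Plays the role of the continuation server: make the callouts,
        # then invoke the callback with all responses.
        responses = await asyncio.gather(*self.requests)
        self.callback(list(responses))


async def fake_stock_service(product: str) -> str:
    await asyncio.sleep(0.01)  # simulated network latency
    return f"{product}: in stock"


async def main() -> List[str]:
    log: List[str] = []
    cont = Continuation(callback=lambda responses: log.extend(responses))
    cont.add_request(fake_stock_service("Widget"))
    cont.add_request(fake_stock_service("Gadget"))
    task = asyncio.create_task(cont.run())   # hand off to the "continuation server"
    log.append("control returned to user")   # handler returns before any response
    await task
    return log


result = asyncio.run(main())
```

The log records "control returned to user" before either stock response, which is the behaviour the continuation is meant to give the page.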

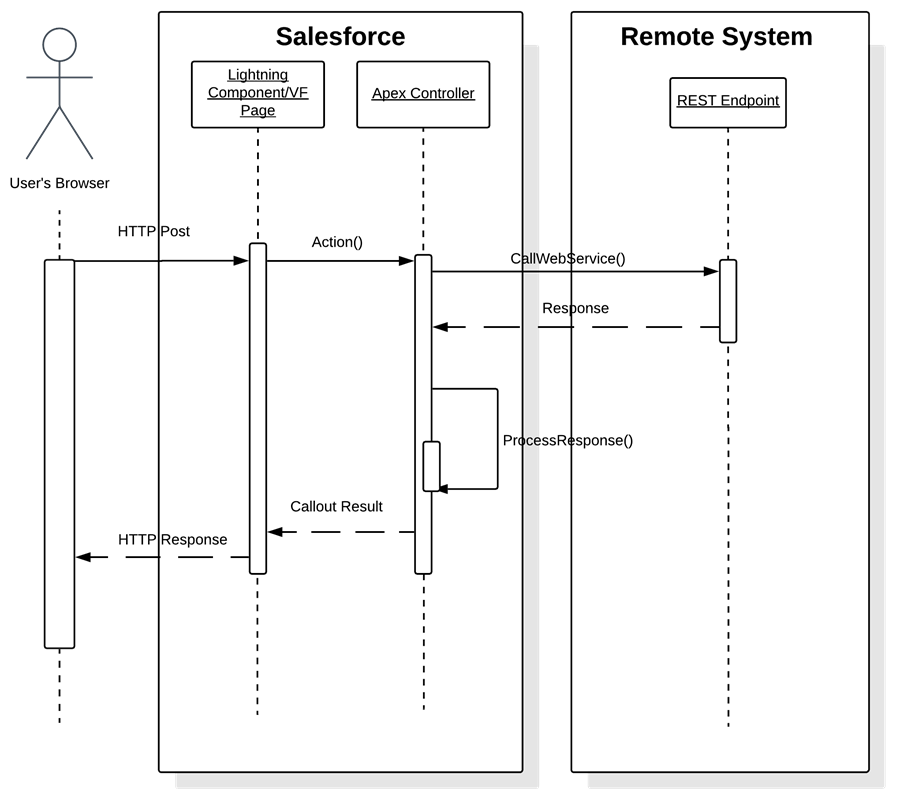

Sequence Diagram

The diagram above shows the sequence of execution for this callout mechanism.

Picture Courtesy: Salesforce.com

Pattern 2 - Remote Process Invocation - Fire & Forget

Scenario

In this Scenario we want to send some data from Salesforce to another system but the user does not need to wait for the data to reach the target system, they are happy to proceed. An example would be a user creating a new Account in Salesforce, and upon the Account being saved, we want to kick off a process that will create this new Account in SAP. Once SAP has created a Customer Record we want a SAP Customer ID to be returned back to the Account record in Salesforce.

Approach

- Leverage a notifier pattern, instead of a data carrier pattern.

- Create a Platform Event called Account_Integration_Event__e

- The Platform Event can have fields that capture the Salesforce Account Id, the date the account was modified and the event that occurred: 'Create' or 'Update'

- The message format above acts as more of a notifier rather than a data carrier.

- Create a Process Builder process which publishes a new Platform Event onto the Event bus whenever the Account record is created or edited.

- Leverage a middleware solution such as Mulesoft that can subscribe to this platform event and make a 'Remote Call In' back into Salesforce to retrieve the Account Details and then send them on to SAP.

- Once SAP has completed account creation, we take the generated SAP Customer ID, and update the Salesforce Account record with the SAP Customer ID.

- This requires a middleware solution and a Process Orchestration capability to coordinate the information retrieval from Salesforce, write in SAP, and update of the Account record in Salesforce.
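The orchestration described above can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the in-memory dictionaries stand in for Salesforce and SAP, and the names `AccountIntegrationEvent`, `create_sap_customer`, `handle_event` and the `SAP_Customer_Id__c` field are hypothetical. The point is that the event only identifies what changed, and the middleware calls back in for the data.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict


@dataclass(frozen=True)
class AccountIntegrationEvent:
    """Notifier-style payload: says what changed, carries no account data."""
    account_id: str
    modified_date: datetime
    event_type: str  # "Create" or "Update"


# In-memory stand-ins for the two systems (assumptions for this sketch).
salesforce_accounts: Dict[str, Dict[str, str]] = {
    "001A": {"Name": "Acme Ltd", "BillingCity": "London"},
}
sap_customers: Dict[str, Dict[str, str]] = {}


def create_sap_customer(details: Dict[str, str]) -> str:
    """Pretend SAP call: stores the customer and returns a generated ID."""
    customer_id = f"SAP-{len(sap_customers) + 1:04d}"
    sap_customers[customer_id] = dict(details)
    return customer_id


def handle_event(event: AccountIntegrationEvent) -> None:
    """Middleware orchestration: call in, write to SAP, write the ID back."""
    details = salesforce_accounts[event.account_id]   # remote call-in for the record
    if event.event_type == "Create":
        sap_id = create_sap_customer(details)          # write in SAP
        details["SAP_Customer_Id__c"] = sap_id         # update the Salesforce Account
    # Handling of "Update" events is omitted from this sketch.


handle_event(AccountIntegrationEvent("001A", datetime.now(timezone.utc), "Create"))
```

Because the payload is only a notifier, the event definition does not need to change when new Account fields have to reach SAP; only the call-in query does.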

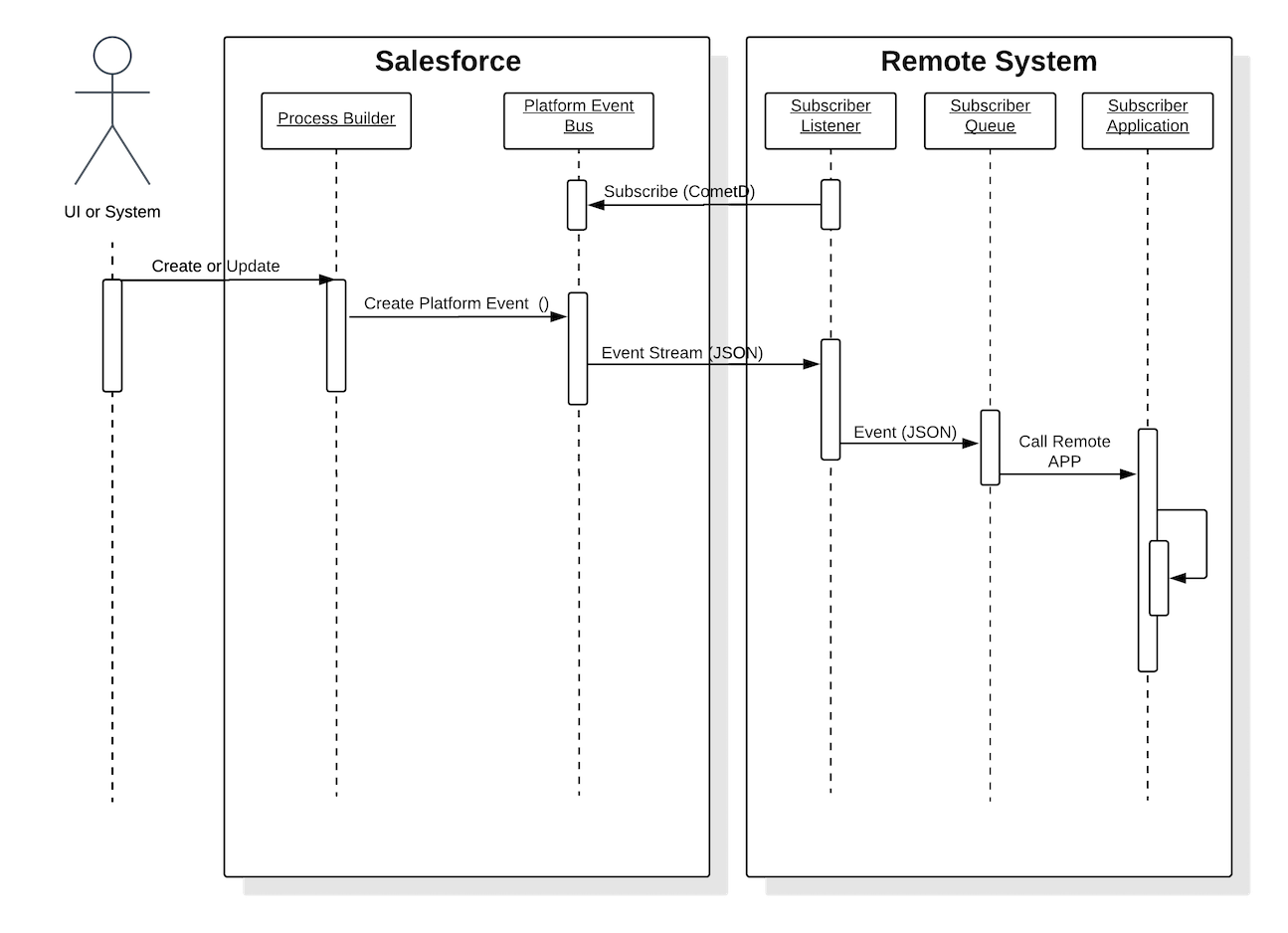

Sequence Diagram

Picture Courtesy: Salesforce.com

Platform Event Pointers

Platform Events offer a great Declarative Means of doing an integration and starting an integration process. By leveraging a publish-subscribe model we are allowing multiple subscribers to view the messages published by a transmitter on an Event Bus and take an action from it.

Some pointers:

- Events get sent whether or not receivers are listening

- Receivers don't acknowledge receipt of the message

- Systems that send events and others that receive the events don’t have dependencies on each other, except for the format of the message content.

- You can have multiple subscribers listening out for platform events and doing something when they occur.

- External apps publish events using the sObject (SOAP or REST) API and consume events using CometD clients

- Platform events are stored for 24 hours.

- You can retrieve stored events through the API using CometD clients, but not through Apex, SOQL, SOSL, reports or list views.
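The pointers above describe fire-and-forget delivery with a retention window. The toy event bus below models those semantics in Python. It is not the Salesforce event bus or the CometD protocol: the `EventBus` class and its `publish`, `subscribe` and `replay` methods are hypothetical, and the 24-hour window is simply the figure quoted above.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Callable, Dict, List, Tuple

RETENTION = timedelta(hours=24)  # retention window quoted in the pointers above


@dataclass
class EventBus:
    subscribers: List[Callable[[Dict], None]] = field(default_factory=list)
    stored: List[Tuple[datetime, Dict]] = field(default_factory=list)

    def subscribe(self, handler: Callable[[Dict], None]) -> None:
        self.subscribers.append(handler)

    def publish(self, event: Dict, now: datetime) -> None:
        # Fire and forget: the event is stored and delivered to whoever is
        # listening right now; nobody acknowledges receipt.
        self.stored.append((now, event))
        for handler in self.subscribers:
            handler(event)

    def replay(self, now: datetime) -> List[Dict]:
        # Only events still inside the retention window can be retrieved.
        return [event for sent, event in self.stored if now - sent <= RETENTION]


bus = EventBus()
t0 = datetime(2024, 1, 1, 9, 0)
bus.publish({"id": 1}, now=t0)                        # nobody is listening yet
received: List[Dict] = []
bus.subscribe(received.append)
bus.publish({"id": 2}, now=t0 + timedelta(hours=20))  # delivered to the subscriber
```

The late subscriber never sees the first event live, but can still replay it until the retention window closes.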

The following are means of publishing a platform event:

- APEX Class

- Process Builder

- Lightning Flows

The following are means of subscribing/listening to a platform event:

- Lightning Component (Comet D)

- Visualforce Page (Comet D)

- Applications or Middleware that can use the Comet D protocol

- Process Builder

- Apex Trigger

Pattern 3 - Batch Data Sync - Salesforce Bulk API

Scenario

In this scenario we want to load a large volume of data into the Salesforce platform. We have over 50,000 records that we want to migrate to Salesforce and we want to do this in a controlled, efficient and monitored way.

Approach

The best means of doing this data migration / data integration is to leverage the Salesforce Bulk API. So how does the Salesforce Bulk API work?

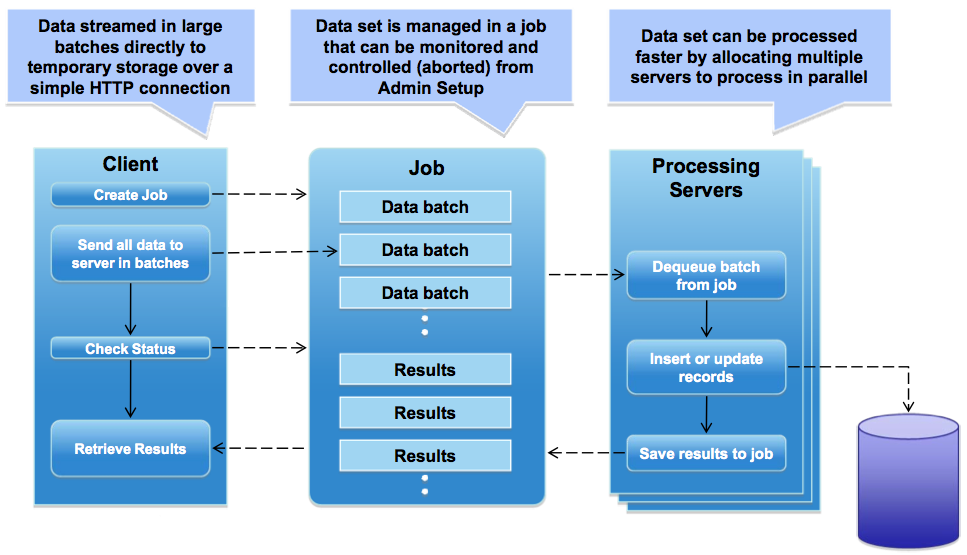

Bulk API Functionality

Picture Courtesy: Salesforce.com

- We write a Java Client Application or leverage a middleware solution such as Informatica, Jitterbit or Mulesoft.

- The client / middleware is responsible for dividing the data into batches of 10,000 records.

- The client/middleware invokes the Bulk API which firstly creates a new JOB record in Salesforce.

- The batches of data that the client has created are sent to the Salesforce server in the created batches and logged against the JOB record

- When the Salesforce processing servers are free, a batch is dequeued from the Job record and sent to the processing servers for processing.

- Salesforce attempts to insert/update the records in chunks of 200 records.

- The results of the processing of a batch are stored against the JOB record in Salesforce

- A user can review the status of the batches that are being processed against the Job record in Salesforce.
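The client-side splitting in the steps above is simple arithmetic, sketched here in Python. The helper name `split` is hypothetical, and the integers stand in for real records. The batch size of 10,000 and the chunk size of 200 are the figures from the text; the chunking itself is done by Salesforce, and is only reproduced here to show the numbers.

```python
from typing import Iterator, Sequence, TypeVar

T = TypeVar("T")

BATCH_SIZE = 10_000  # records per batch sent by the client
CHUNK_SIZE = 200     # records per chunk processed on the server


def split(records: Sequence[T], size: int) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]


records = list(range(50_000))                  # placeholder data for the sketch
batches = list(split(records, BATCH_SIZE))
chunks_in_first_batch = list(split(batches[0], CHUNK_SIZE))
```

For 50,000 records this gives 5 batches, and each full batch is processed as 50 chunks of 200 records.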

Some Pointers

- Batches are held in temporary storage.

- Bulk API can process XML, JSON or CSV files

- Batches can be processed serially or in parallel.

- Your program can query the Job record asynchronously or in Salesforce directly to view the progress of the batches that are being uploaded.

- Batches that time out are automatically put back in the queue and re-processed; individual records that fail with an error are not retried.

- Batches move to shared processing servers, which are shared with other orgs as well.

- Each batch of say 10,000 records is processed in groups of 200 records to stay within governor limits.

- Batches time out after 10 minutes, so you may need to reduce the batch size if a lot of background processing (workflow rules, triggers, Process Builder, assignment rules) runs on each record.

Errors when using the Bulk API

- Errors can occur when trying to insert/update records using the Bulk API

- A batch can have a Completed state even if some records have failed.

- If a record fails due to an error returned from Salesforce it is not tried again.

- If a subset of records have failed, the batch is not rolled back.

- So you will have successful records being inserted/updated and other records marked as failed.
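Because a Completed batch can still contain failures, the client has to inspect the per-record results. The Python sketch below assumes a result file in CSV form with `Id`, `Success`, `Created` and `Error` columns, one row per submitted record in the same order. The function name `split_results`, the sample Id and the sample error text are made up for illustration.

```python
import csv
import io
from typing import Dict, List, Tuple

Record = Dict[str, str]


def split_results(
    rows: List[Record], result_csv: str
) -> Tuple[List[Record], List[Tuple[Record, str]]]:
    """Pair each submitted row with its result row and separate failures."""
    succeeded: List[Record] = []
    failed: List[Tuple[Record, str]] = []
    reader = csv.DictReader(io.StringIO(result_csv))
    for row, result in zip(rows, reader):
        if result["Success"].lower() == "true":
            succeeded.append(row)
        else:
            failed.append((row, result["Error"]))  # keep the error for review
    return succeeded, failed


submitted = [{"Name": "Acme"}, {"Name": ""}]
# Made-up sample result data for the sketch.
sample_result = (
    '"Id","Success","Created","Error"\n'
    '"001xx0000000001","true","true",""\n'
    '"","false","false","REQUIRED_FIELD_MISSING:Required fields are missing: [Name]"\n'
)
ok, bad = split_results(submitted, sample_result)
```

The failed rows can then be corrected and submitted in a new batch, since Salesforce will not retry them itself.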

Processing Time/Retry for Batches

- 5 minute limit for processing each chunk

- 10 minute limit for processing a single batch

- If it takes longer than 10 minutes to process a whole batch, the Bulk API places the remainder of the batch back into the queue for processing.

- If the batch continues to exceed 10 minutes on subsequent attempts, the batch is placed again to the back of the queue, and reprocessed up to 10 times before the batch is permanently marked as failed.

- A batch is retried only because it is taking too long to process, not because of record failures.
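The retry behaviour can be modelled as a queue in which a timed-out batch goes to the back and is given up on after a fixed number of attempts. This Python sketch is a simplification under stated assumptions: it re-queues the whole batch rather than only the remainder, it counts 10 attempts in total, and the `process_queue` function and the `times_out` callback are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, Dict, List

MAX_ATTEMPTS = 10  # assumption: 10 attempts in total before permanent failure


def process_queue(
    batch_ids: List[str], times_out: Callable[[str, int], bool]
) -> Dict[str, str]:
    """times_out(batch_id, attempt) says whether that attempt exceeds the limit."""
    queue: Deque[str] = deque(batch_ids)
    attempts: Dict[str, int] = {batch_id: 0 for batch_id in batch_ids}
    status: Dict[str, str] = {}
    while queue:
        batch_id = queue.popleft()
        attempts[batch_id] += 1
        if not times_out(batch_id, attempts[batch_id]):
            status[batch_id] = "Completed"
        elif attempts[batch_id] >= MAX_ATTEMPTS:
            status[batch_id] = "Failed"      # permanently marked as failed
        else:
            queue.append(batch_id)           # back of the queue for another try
    return status


# "slow" always times out; "flaky" times out on its first two attempts only.
status = process_queue(
    ["fast", "slow", "flaky"],
    times_out=lambda batch_id, attempt: batch_id == "slow"
    or (batch_id == "flaky" and attempt < 3),
)
```

Here "fast" and "flaky" end up Completed and "slow" ends up Failed, which is the case where reducing the batch size is the practical fix.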

Summary

In this article I have run through some frameworks and patterns that can be used for integration use cases. These are the ones that I would recommend based on the type of integration that you are looking to perform. It would be great to hear about your practical experience of leveraging these approaches.
